The Ethics of AI – Laws and Rules related to AI and Robotics

The Ethics of AI

The ethics of AI (artificial intelligence) seeks to define the principles and standards that should guide how this technology is developed and used, and to address its impact on society and individuals.

Here are some key principles of AI ethics:

Transparency: AI systems should be designed so that their decisions and actions are understandable and explicable to users and stakeholders.

Accountability: AI designers and users must be accountable for the actions of these systems. This includes considering potential consequences and taking steps to mitigate risks.

Fairness and non-discrimination: AI should not be biased or favor one group of people over another. It should be fair and respect the fundamental rights of all individuals.

Privacy: AI systems must respect users’ privacy and data confidentiality. Personal information must not be misused or used without appropriate consent.

Security: AI developers must implement robust security measures to protect systems against potential attacks and abuse.

Consent and autonomy: Individuals must have control over the use of their data, and be able to make informed decisions about the use of AI that affects them.

Social and environmental impact: AI should be developed and used in a way that promotes social, economic and environmental well-being, while avoiding negative effects on society and the planet.

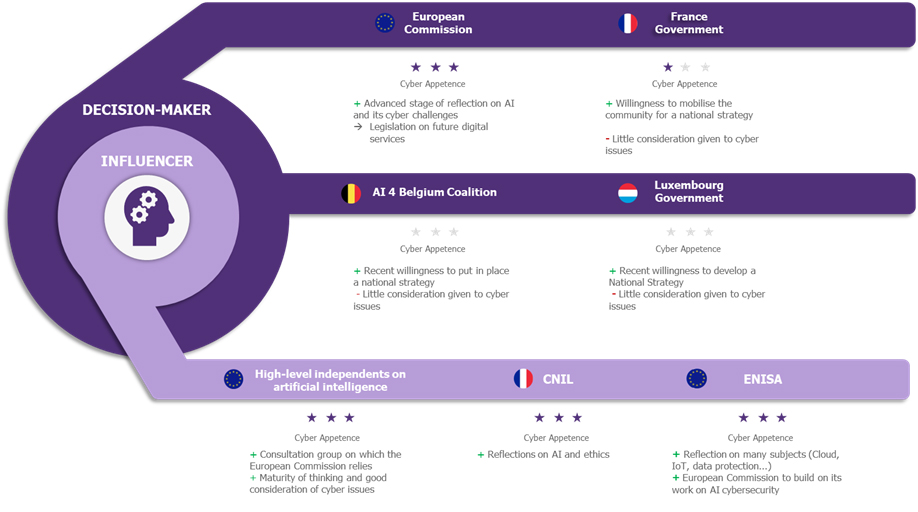

International collaboration: Cooperation between national and international players is essential to develop common standards and ethical principles in the development and use of AI.

AI ethics are constantly evolving in response to the emerging challenges associated with this technology. It is crucial that industry players, researchers, policymakers and civil society work together to promote responsible and ethical AI, in order to reap its full benefits while minimizing potential risks.

"Black box" artificial intelligence (AI) systems

"Black box" artificial intelligence (AI) systems refer to AI models or algorithms that make decisions or predictions without providing a clear explanation or understanding of how they arrived at those conclusions.

These systems present several ethical challenges, including:

- Lack of transparency: The opacity of black box AI systems makes it difficult for users, regulators, and even developers to understand the reasoning behind their decisions. This lack of transparency can lead to mistrust and make it harder to ensure accountability for AI-driven outcomes.

- Bias and discrimination: Black box AI systems can inadvertently perpetuate bias and discrimination present in their training data. If the decision-making process is not transparent, these biases are hard to identify and address, which can lead to unfair or discriminatory outcomes for certain groups.

- Accountability and responsibility: When an AI system’s decision-making process is not transparent, it becomes challenging to assign responsibility for its actions, especially in cases where the system makes harmful or unethical decisions.

- Impact on human rights: AI systems that lack transparency and may produce biased outcomes have the potential to violate individuals’ human rights, such as privacy, freedom of expression, and non-discrimination.

- Lack of explainability: The inability to explain AI systems’ decisions may hinder their acceptance in critical applications like healthcare, finance, and legal domains, where users need to understand the reasoning behind the system’s choices.

- Trust and adoption: Lack of transparency and explainability can lead to a lack of trust in AI systems, limiting their adoption in various sectors and hindering their potential to benefit society.

- Regulatory challenges: The opacity of black box AI systems can pose challenges in terms of regulatory compliance, as regulators may struggle to assess whether these systems adhere to ethical standards or legal requirements.

Addressing these ethical challenges requires developing more transparent and explainable AI systems. Research into explainable AI, fairness-aware algorithms, and interpretability techniques aims to make AI systems more understandable and accountable, thus mitigating potential harms and ensuring their ethical use in various domains.

Additionally, establishing guidelines and regulations for AI development and deployment can help create a framework that prioritizes transparency, fairness, and human rights in AI systems.
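One widely used interpretability technique for black box models is permutation feature importance: shuffle one input feature at a time and measure how much the model's accuracy drops. The Python sketch below is a minimal, illustrative version that only calls the model as a function, so it never needs to see inside it. The `black_box` model and the random data are hypothetical stand-ins, not a real system.

```python
import random


def accuracy(model, X, y):
    """Fraction of rows that the model labels correctly."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Estimate each feature's importance as the average drop in accuracy
    when that feature's column is randomly shuffled. The model is only
    called as a function, i.e. treated as a black box."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Copy each row with feature j replaced by a shuffled value.
            X_perm = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical opaque model: internally it only looks at feature 0.
def black_box(row):
    return 1 if row[0] > 0.5 else 0


data_rng = random.Random(42)
X = [[data_rng.random(), data_rng.random()] for _ in range(200)]
y = [black_box(row) for row in X]  # labels the model reproduces perfectly
print(permutation_importance(black_box, X, y))
```

Because `black_box` ignores feature 1, shuffling that column never changes a prediction, so its importance is exactly 0.0, while feature 0 receives a large positive score. Libraries such as scikit-learn provide more complete implementations; this sketch only illustrates the idea.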

Resolving the trust issues associated with AI

Resolving the trust issues associated with AI is of utmost importance, both in the present and for potential future scenarios.

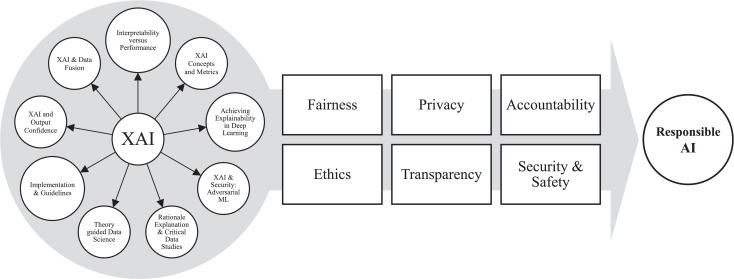

As the field of Explainable AI (XAI) continues to grow, it offers a promising path to prevent dystopian outcomes that some AI skeptics fear.

The ability to explain how AI systems make decisions is indispensable for avoiding scenarios like those of fictional AI villains such as HAL 9000 or Skynet, where machines escape human control and wreak havoc.

Explainable AI helps bridge the gap between human understanding and AI decision-making.

It allows humans to gain insights into the decision-making process of AI algorithms, enhancing transparency and accountability.

By shedding light on the internal workings of AI, XAI instills a sense of trust and confidence in the technology, addressing the concerns surrounding black box systems.

Progress in XAI also supports responsible AI development and deployment.

It empowers users, policymakers, and stakeholders to scrutinize and validate AI outcomes, ensuring that they align with ethical and legal standards. By embracing Explainable AI, we can build a future where AI is a valuable and beneficial tool, working collaboratively with humans to tackle complex challenges while maintaining human oversight and control.

AI stands for Artificial Intelligence

AI stands for Artificial Intelligence, a field of computer science that aims to create computer systems capable of performing tasks normally requiring human intelligence.

These systems can perform tasks such as speech recognition, computer vision and decision-making, and many of them improve by learning from data (machine learning).

XAI stands for Explainable Artificial Intelligence

XAI stands for Explainable Artificial Intelligence. It’s an approach that aims to make AI systems more transparent and comprehensible to human beings.

Traditional AI systems are often seen as "black boxes", because they make complex decisions without revealing how they reached them.

With Explainable AI, AI designers seek to make the decision-making processes of algorithms more explainable, enabling users and experts to understand how and why a specific decision was made by the AI.

This transparency is crucial to building trust in the use of AI, and to ensuring that decisions made by AI systems are ethical, fair and responsible.
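For intrinsically interpretable models, an explanation can be as simple as listing how much each input pushed the decision up or down. The following minimal Python sketch assumes a hypothetical linear credit-scoring model: the `LinearScorer` class, the feature names and the weights are invented for illustration. It reports each feature's contribution (weight × value), and these contributions plus the bias add up to the score.

```python
from dataclasses import dataclass


@dataclass
class LinearScorer:
    """A deliberately simple, interpretable model: score = bias + sum(w_i * x_i)."""
    weights: dict[str, float]
    bias: float
    threshold: float = 0.0

    def score(self, features: dict[str, float]) -> float:
        return self.bias + sum(w * features[name] for name, w in self.weights.items())

    def decide(self, features: dict[str, float]) -> bool:
        return self.score(features) >= self.threshold

    def explain(self, features: dict[str, float]) -> list[tuple[str, float]]:
        """Each feature's contribution w_i * x_i, largest effect first.
        Contributions plus the bias equal the score (up to rounding)."""
        contributions = [(name, w * features[name]) for name, w in self.weights.items()]
        return sorted(contributions, key=lambda item: abs(item[1]), reverse=True)


# Hypothetical credit-scoring weights and applicant, for illustration only.
model = LinearScorer(
    weights={"income": 0.4, "debt_ratio": -1.5, "late_payments": -0.8},
    bias=0.2,
)
applicant = {"income": 3.0, "debt_ratio": 0.5, "late_payments": 1.0}

print("approved:", model.decide(applicant))
for name, contribution in model.explain(applicant):
    print(f"{name:>14}: {contribution:+.2f}")
```

Here the applicant's score is about -0.15, below the threshold of 0, so the application is declined; the explanation shows that income pushed the score up while late payments and the debt ratio pushed it down. Complex models such as deep neural networks need more elaborate techniques to produce comparable explanations.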

Controlling AI development and decision-making

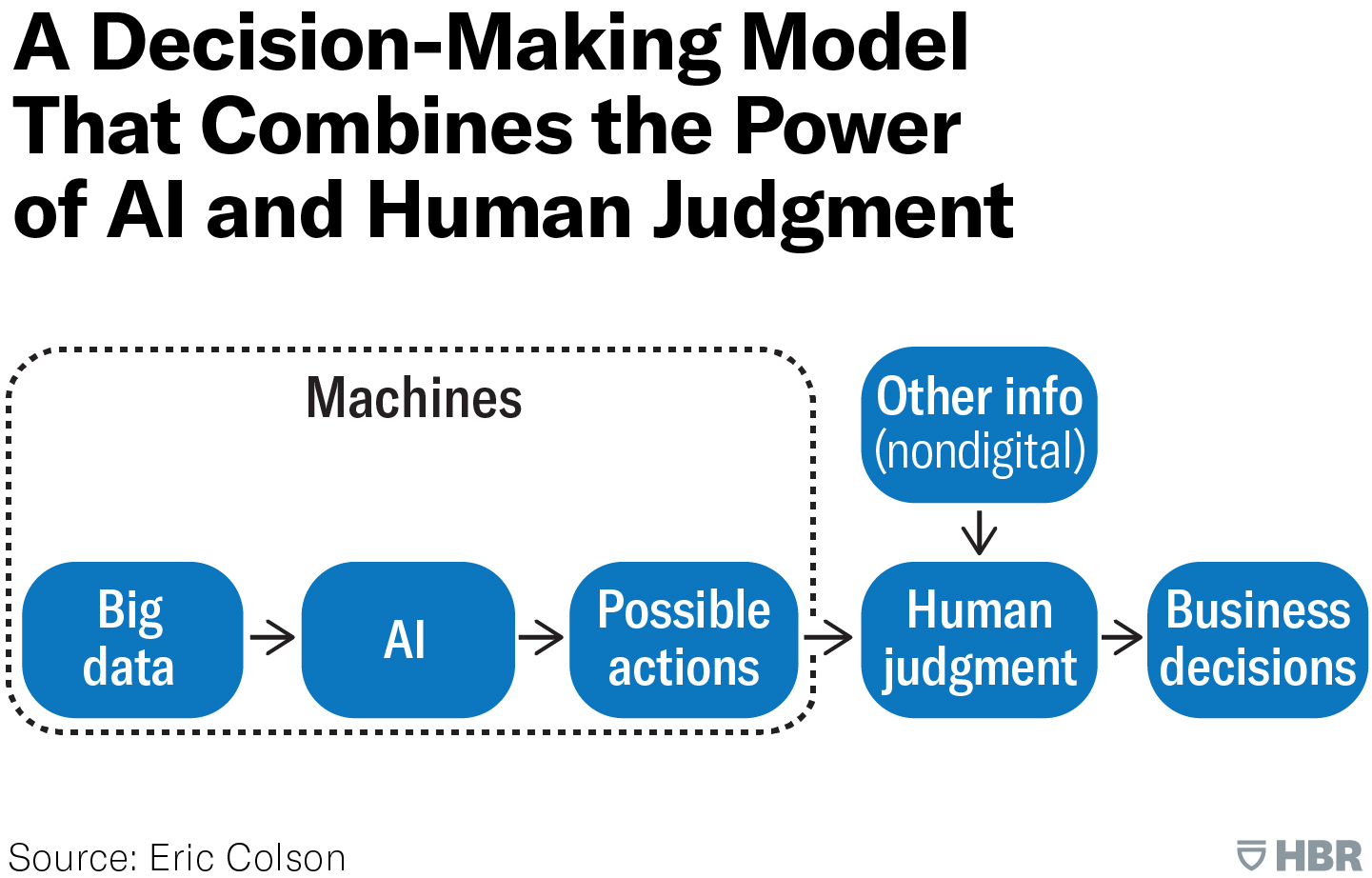

Controlling AI development and decision-making is crucial to ensure that AI systems are aligned with human values, ethical standards, and societal needs.

Here are some key strategies to enable human control over AI:

- Ethical Guidelines and Regulations: Establish clear ethical guidelines and regulatory frameworks for AI development and deployment. These guidelines should outline the principles and values that AI systems should adhere to, such as transparency, fairness, accountability, and respect for human rights.

- Explainable AI (XAI): Promote research and development of explainable AI techniques. XAI allows AI systems to provide understandable explanations for their decisions, enabling humans to comprehend and verify the reasoning behind AI-driven outcomes.

- Human-in-the-Loop Systems: Incorporate human oversight in AI systems through human-in-the-loop approaches. This involves human experts being actively involved in the decision-making process, either by providing input or reviewing and validating AI-generated decisions.

- Bias Detection and Mitigation: Implement mechanisms to detect and address biases in AI algorithms during their development. Regular audits and bias assessments should be conducted to ensure that AI systems do not discriminate against specific groups or perpetuate societal biases.

- Impact Assessments: Conduct comprehensive impact assessments before deploying AI systems. Evaluate the potential social, economic, and ethical implications of AI applications to understand their consequences on individuals and communities.

- Data Governance: Establish robust data governance practices to ensure the responsible and ethical collection, storage, and usage of data in AI systems. Protecting individuals’ privacy and data rights is essential to maintain human control over AI.

- Human Values by Design: Integrate human values and ethical considerations into the design process of AI systems. Involve diverse stakeholders, including ethicists, social scientists, and affected communities, to ensure that AI development is aligned with human needs and values.

- Continuous Monitoring and Evaluation: Continuously monitor AI systems in real-world scenarios and evaluate their performance and impact. Regular audits and feedback loops allow for necessary adjustments and improvements to be made based on human oversight.

- Public Engagement and Education: Engage the public in discussions about AI’s development, deployment, and potential implications. Educate individuals about AI technologies, their benefits, and their risks, empowering them to make informed decisions and participate in shaping AI policies.

- Multidisciplinary Collaboration: Foster collaboration between different fields, including technology, ethics, law, and social sciences. Multidisciplinary perspectives are vital for developing comprehensive approaches to human-controlled AI.
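The human-in-the-loop strategy can be sketched as a simple routing rule: predictions the model is confident about are accepted automatically, and the rest wait for a human reviewer who can confirm or override them. This Python sketch is illustrative only; the `HumanInTheLoopRouter` class, its 0.9 confidence threshold and the case identifiers are hypothetical, and a real system would also need logging, reviewer assignment and well-calibrated confidence scores.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Decision:
    case_id: str
    label: str
    decided_by: str  # "model" or "human"


@dataclass
class HumanInTheLoopRouter:
    """Accept confident predictions automatically; queue the rest for human review."""
    confidence_threshold: float = 0.9
    review_queue: list = field(default_factory=list)

    def route(self, case_id: str, label: str, confidence: float) -> Optional[Decision]:
        if confidence >= self.confidence_threshold:
            return Decision(case_id, label, decided_by="model")
        # Not confident enough: keep the model's suggestion for a reviewer.
        self.review_queue.append((case_id, label, confidence))
        return None

    def record_human_decision(self, case_id: str, label: str) -> Decision:
        """A reviewer confirms or overrides the suggested label; the case leaves the queue."""
        self.review_queue = [item for item in self.review_queue if item[0] != case_id]
        return Decision(case_id, label, decided_by="human")


router = HumanInTheLoopRouter(confidence_threshold=0.9)
auto = router.route("case-1", "approve", confidence=0.97)    # accepted automatically
pending = router.route("case-2", "reject", confidence=0.62)  # sent to a reviewer
print(router.review_queue)
final = router.record_human_decision("case-2", "approve")    # reviewer overrides
print(auto, final)
```

The threshold sets the trade-off: raising it sends more cases to people, increasing oversight at the cost of reviewer workload.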

By implementing these strategies, we can take advantage of the autonomy and capabilities of AI systems while maintaining human control and ensuring that AI technologies are aligned with human values and serve the greater good.

AI development algorithm

To keep control over how an AI algorithm is developed, it is essential to follow ethical, transparent and responsible practices throughout the process. Here are some key steps for doing this effectively:

Define clear objectives: Before starting development, it’s essential to clearly define the AI’s objectives and the problems it is intended to solve. Objectives must be aligned with ethical values and human needs.

Transparency and traceability: Ensure that the algorithm development process is transparent and traceable. Document all stages of development, including design choices, datasets used, and parameters selected.

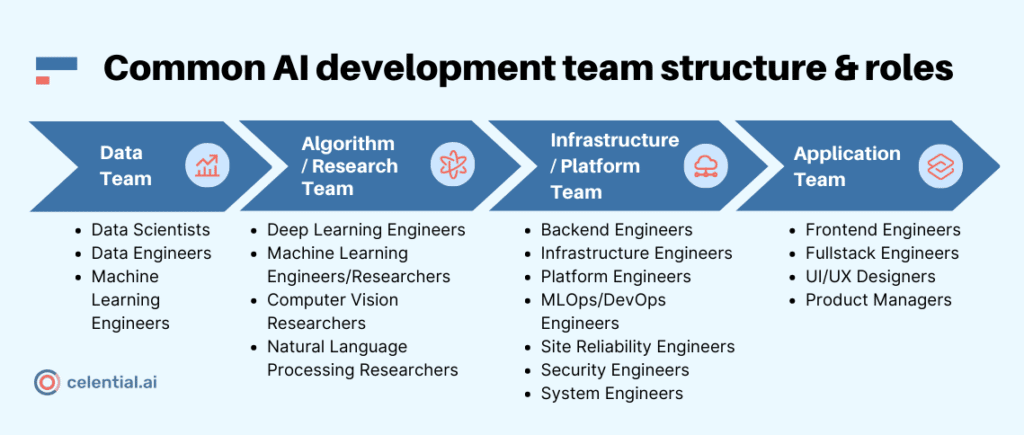

Diversity of development teams: Build a diverse development team including AI experts, ethicists and application domain specialists. Diverse perspectives help ensure balanced decision-making.

Ethical assessment: Carry out ethical assessments throughout the development process. Identify and examine the potential ethical implications of AI and take steps to mitigate risks.

Bias and fairness: Pay attention to bias in data and algorithms. Put mechanisms in place to detect, evaluate and mitigate bias to ensure equity and inclusion in AI outcomes.

Accountability: Establish clear guidelines for accountability in AI development. Define the roles and responsibilities of each team member to ensure responsible decision-making.

Testing and validation: Carry out rigorous testing and cross-validation to assess the algorithm’s performance and reliability. Make sure the AI works properly and meets the defined objectives.

Data protection and privacy: Protect the data used to train the AI and respect users’ privacy. Use strong security measures, such as access controls and encryption, to prevent unauthorized access.

Audit mechanisms: Set up audit mechanisms to continuously monitor the AI algorithm once it has been deployed. This enables any undesirable behavior to be detected quickly and corrective action taken.

Stakeholder engagement: Involve relevant stakeholders throughout AI development. Listen to their concerns and incorporate their feedback into the decision-making process.
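The bias and fairness step can start with a simple audit of model outputs. The Python sketch below computes two common group-fairness measures on hypothetical predictions: the demographic parity difference (the gap between the highest and lowest selection rates across groups) and the disparate impact ratio (lowest rate divided by highest). The 0.8 cut-off reflects the commonly cited "four-fifths" rule of thumb; it is an assumption here, not a legal test, and no single metric proves that a system is fair.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive predictions (1) within each group."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def fairness_report(predictions, groups):
    rates = selection_rates(predictions, groups)
    highest, lowest = max(rates.values()), min(rates.values())
    return {
        "selection_rates": rates,
        # Demographic parity difference: 0 means every group is selected equally often.
        "parity_difference": highest - lowest,
        # Disparate impact ratio (1.0 if no group gets any positive prediction).
        "disparate_impact_ratio": lowest / highest if highest > 0 else 1.0,
    }


# Hypothetical audit data: model decisions (1 = approved) and each applicant's group.
preds = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]
report = fairness_report(preds, groups)
print(report)
if report["disparate_impact_ratio"] < 0.8:
    print("Warning: possible adverse impact; investigate data and model.")
```

With this sample data, group A is approved 80% of the time and group B 20% of the time, giving a disparate impact ratio of 0.25, which triggers the warning.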

By following these steps, it is possible to keep effective control over AI development and ensure that the resulting algorithm is aligned with ethical values, transparent, and meets society’s needs and expectations.
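The testing and validation step mentions cross-validation. A minimal sketch of k-fold cross-validation in Python follows: the data is split into k folds, and each fold serves once as the test set while the model is trained on the remaining folds. The toy threshold learner (`train_threshold`) and the synthetic data are hypothetical, chosen only to keep the example self-contained.

```python
import random
from statistics import mean


def k_fold_indices(n, k, seed=0):
    """Shuffle indices 0..n-1 and split them into k folds of near-equal size."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def cross_validate(train, X, y, k=5, seed=0):
    """Train on k-1 folds, test on the held-out fold, repeat k times.
    Returns one accuracy score per fold."""
    folds = k_fold_indices(len(X), k, seed)
    scores = []
    for i, test_idx in enumerate(folds):
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        model = train([X[j] for j in train_idx], [y[j] for j in train_idx])
        correct = sum(model(X[j]) == y[j] for j in test_idx)
        scores.append(correct / len(test_idx))
    return scores


def train_threshold(xs, ys):
    """Toy learner for 1-D data: threshold halfway between the two class means."""
    mean0 = mean(x for x, c in zip(xs, ys) if c == 0)
    mean1 = mean(x for x, c in zip(xs, ys) if c == 1)
    t = (mean0 + mean1) / 2
    return lambda x: int(x >= t)


rng = random.Random(1)
X = [rng.gauss(0, 1) for _ in range(50)] + [rng.gauss(3, 1) for _ in range(50)]
y = [0] * 50 + [1] * 50
scores = cross_validate(train_threshold, X, y, k=5)
print([round(s, 2) for s in scores], "mean:", round(mean(scores), 3))
```

Reporting the spread of the fold scores, not just their mean, gives a more honest picture of how reliably the model performs on data it has not seen.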

Artificial intelligence (AI) algorithms

Artificial intelligence (AI) algorithms can vary depending on the type of AI model used and the specific task to be accomplished. There are different types of AI algorithms, each tailored to particular problems.

Here are a few examples of algorithms commonly used in AI:

Artificial neural networks (ANNs): Neural networks are inspired by the workings of the human brain. They are used for deep learning and can be used for tasks such as image recognition, natural language processing, prediction, etc.

Genetic algorithms: Genetic algorithms are used to solve optimization problems, inspired by the processes of natural selection and evolution.

Decision trees: Decision trees are used for classification and decision-making. They represent a model as a tree of decision nodes, each testing a feature value, that lead to leaves holding the result, which makes their decisions relatively easy to interpret.

Support vector machines (SVM): SVMs are used for classification and regression. They look for the boundary that separates classes with the widest possible margin and, using kernel functions, can also separate data that is not linearly separable.

K-nearest neighbors (K-NN): K-NN is used for classification and pattern recognition based on the closest examples in the feature space.

Reinforcement learning algorithms: These algorithms learn by interacting with an environment and receiving rewards or penalties, and are generally used to solve sequential decision-making problems.

Clustering algorithms: Clustering algorithms are used to group similar data into clusters, which is useful for data mining and segmentation.

Natural language processing (NLP) algorithms: These algorithms are used to understand and process human language, including speech recognition, machine translation and automatic summarization.
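To make one of these concrete, here is a minimal from-scratch K-NN classifier in Python, assuming Euclidean distance and a simple majority vote; the two-dimensional points and their labels are invented for illustration.

```python
from collections import Counter
from math import dist


def knn_predict(train_X, train_y, query, k=3):
    """Label `query` by majority vote among its k nearest training examples
    (Euclidean distance)."""
    nearest = sorted(zip(train_X, train_y), key=lambda pair: dist(pair[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


train_X = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (6.0, 6.0), (6.5, 7.0), (7.0, 6.5)]
train_y = ["small", "small", "small", "large", "large", "large"]

print(knn_predict(train_X, train_y, (1.8, 1.2)))  # → small
print(knn_predict(train_X, train_y, (6.2, 6.8)))  # → large
```

With two classes, an odd `k` avoids tied votes; in practice, features are usually scaled first so that no single feature dominates the distance.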

It’s important to note that these examples only cover a fraction of the many AI algorithms in existence. Each algorithm has its own characteristics, advantages and limitations, and the choice of algorithm depends on the specific problem to be solved and the data available. In addition, new AI algorithms are constantly being developed and improved as research in this field progresses.

Laws and regulations to govern the use of Artificial Intelligence (AI)

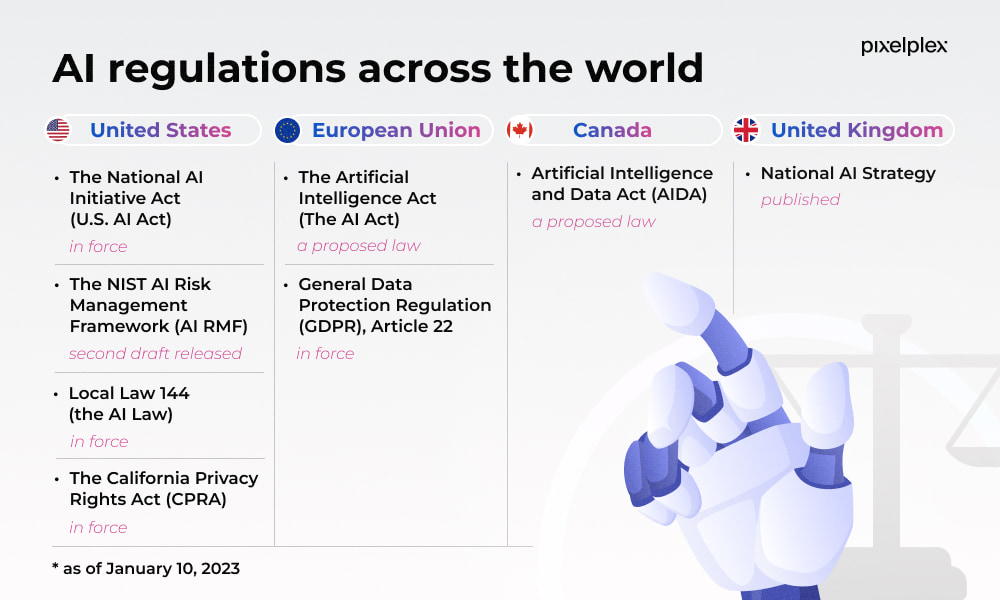

Humans have put in place laws and regulations to govern the use of Artificial Intelligence (AI) and robotics.

These regulations aim to ensure that AI and robots are developed and used ethically, responsibly and safely.

Here are some examples of laws and rules related to AI and robotics:

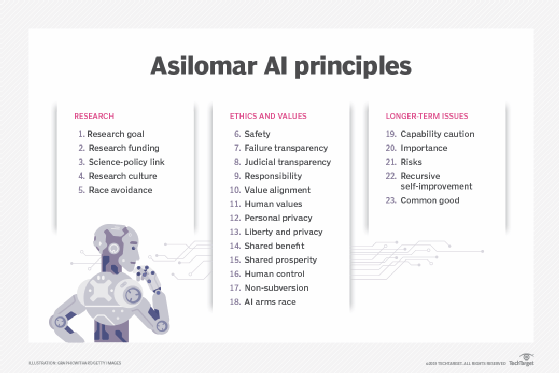

Ethical rules in research and development: Many government agencies and international organizations have established ethical principles to guide AI research and development. These principles often include notions such as transparency, accountability, fairness and safety.

Privacy and data protection regulations: Since AI often processes large amounts of data, it is essential to protect users’ privacy and data security. Laws such as the General Data Protection Regulation (GDPR) in Europe have been passed to ensure that data is used legally and ethically.

Liability and accountability laws: It can be difficult to determine who is responsible when an AI system or a robot makes an error or causes damage. Civil and criminal liability rules are applied, and in some jurisdictions adapted, to assign responsibility for AI-related incidents.

Autonomous weapons laws: For robots and autonomous weapons systems, some countries have adopted rules prohibiting or regulating their use, to reduce the risk of human rights violations and threats to international security.

Safety standards: In some industries, specific safety standards have been put in place to ensure that robots and AI systems are developed and used safely, particularly in areas where they interact closely with humans.

However, it is important to note that laws and regulations relating to AI and robotics vary from country to country, and are evolving rapidly to meet the emerging challenges posed by these technologies.

Oversight of AI and robotics remains a constantly evolving field and requires ongoing monitoring to ensure that these technologies are used responsibly and for the benefit of society.

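Discussions of robot ethics often refer to the Three Laws of Robotics, a fictional set of rules devised by science fiction author Isaac Asimov rather than actual legislation. The Three Laws can be summarized as follows: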

- First Law: A robot must not harm a human being, nor, through inaction, allow harm to come to a human being.

- Second Law: A robot must obey the orders given by human beings, except when such orders would conflict with the First Law.

- Third Law: A robot must protect its own existence, as long as such protection does not conflict with the First or Second Law.

Laws and regulations relating to Artificial Intelligence (AI)

Laws and regulations relating to Artificial Intelligence (AI) and robotics can vary from country to country. Here are some examples of relevant legislative and regulatory texts in certain countries:

General Data Protection Regulation (GDPR) – European Union: Adopted in 2016 and applicable since May 2018, the GDPR governs the protection of personal data of individuals in the European Union, and also applies to organizations outside the EU that offer goods or services to, or monitor, people in the EU.

Artificial intelligence rules – France: France has not adopted a standalone artificial intelligence law. As a member of the European Union, it is covered by EU-wide rules such as the GDPR and the EU Artificial Intelligence Act, which emphasize transparency, accountability and the protection of fundamental rights.

Data protection laws – USA: The USA has no comprehensive federal law regulating AI, but laws do govern the protection of personal data, such as the California Consumer Privacy Act (CCPA) in California.

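Artificial Intelligence Act – European Union: In 2024 the European Union adopted the AI Act (Regulation (EU) 2024/1689), which governs the ethical, legal and safety aspects of AI systems through a risk-based approach: it bans certain AI practices, imposes strict requirements on high-risk systems and sets transparency obligations for others, with its provisions applying in stages.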

Ethical principles of AI – Organisation for Economic Co-operation and Development (OECD): In 2019 the OECD adopted guiding principles on artificial intelligence, which aim to promote ethical, safe and responsible AI.

It is important to note that the field of AI and robotics is constantly evolving, and new legislation and regulations may be adopted over time to address technological challenges and advances. It is therefore advisable to refer to the specific laws and regulations of the country concerned for the most up-to-date information.